Event streaming and event sourcing are two related but distinct concepts in the world of event-driven architecture.

Event streaming is the process of continuously capturing and storing events as they occur in a system. These events can then be processed and analyzed in real-time or stored for later analysis. Event streaming is often used in systems that require real-time processing of large volumes of data, such as financial trading systems or social media platforms.

Here’s a simple example of event streaming in Go that uses the segmentio/kafka-go client to publish events to, and consume them from, an Apache Kafka topic:

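```go
package main

import (
	"context"
	"fmt"
	"log"

	"github.com/segmentio/kafka-go"
)

func main() {
	ctx := context.Background()

	// Set up a Kafka producer that sends events to the "my-topic" topic.
	writer := &kafka.Writer{
		Addr:                   kafka.TCP("localhost:9092"),
		Topic:                  "my-topic",
		AllowAutoTopicCreation: true, // ask the broker to create the topic if it is missing
	}
	defer writer.Close()

	// Send some events to the topic. The writer is synchronous by default,
	// so WriteMessages returns only after the write succeeds or fails.
	err := writer.WriteMessages(ctx,
		kafka.Message{Key: []byte("key1"), Value: []byte("value1")},
		kafka.Message{Key: []byte("key2"), Value: []byte("value2")},
	)
	if err != nil {
		log.Fatalf("failed to write messages: %v", err)
	}

	// Set up a Kafka consumer that reads events from the same topic.
	reader := kafka.NewReader(kafka.ReaderConfig{
		Brokers: []string{"localhost:9092"},
		Topic:   "my-topic",
	})
	defer reader.Close()

	// Read events from the topic. ReadMessage blocks until a message
	// arrives, or returns an error (for example, if the context is cancelled).
	for {
		msg, err := reader.ReadMessage(ctx)
		if err != nil {
			log.Printf("stopped reading: %v", err)
			break
		}
		fmt.Printf("Received message: key=%s, value=%s\n", msg.Key, msg.Value)
	}
}
```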

Event sourcing, on the other hand, is a pattern for building systems that store all changes to the state of an application as a sequence of events. These events can then be used to reconstruct the state of the application at any point in time. Event sourcing is often used in systems that require auditability, traceability, or compliance, such as financial systems or healthcare systems.

Here’s a simple example of event sourcing in Go using an in-memory event store:

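```go
package main

import (
	"errors"
	"fmt"
)

// Event records a single state change; Data carries the event payload.
type Event struct {
	Type string
	Data interface{}
}

// EventStore is an append-only, in-memory log holding one account's events.
type EventStore struct {
	events []Event
}

// Append adds an event to the end of the log.
func (store *EventStore) Append(event Event) {
	store.events = append(store.events, event)
}

// GetEvents returns the events in the order they were appended.
func (store *EventStore) GetEvents() []Event {
	return store.events
}

type Account struct {
	id      string
	balance int
	store   *EventStore
}

func NewAccount(id string, store *EventStore) *Account {
	return &Account{id: id, store: store}
}

// apply is the only place the balance changes, so live updates and
// replays of the stored events always produce the same state.
func (account *Account) apply(event Event) {
	switch event.Type {
	case "deposit":
		account.balance += event.Data.(int)
	case "withdraw":
		account.balance -= event.Data.(int)
	}
}

// record stores the event first, then applies it to the in-memory state.
func (account *Account) record(event Event) {
	account.store.Append(event)
	account.apply(event)
}

func (account *Account) Deposit(amount int) {
	account.record(Event{Type: "deposit", Data: amount})
}

// Withdraw rejects the command, recording nothing, if funds are insufficient.
func (account *Account) Withdraw(amount int) error {
	if account.balance < amount {
		return errors.New("insufficient funds")
	}
	account.record(Event{Type: "withdraw", Data: amount})
	return nil
}

func (account *Account) GetBalance() int {
	return account.balance
}

// Rehydrate rebuilds an account's state by replaying every stored event.
func Rehydrate(id string, store *EventStore) *Account {
	account := NewAccount(id, store)
	for _, event := range store.GetEvents() {
		account.apply(event)
	}
	return account
}

func main() {
	store := &EventStore{}
	account := NewAccount("123", store)

	account.Deposit(100)
	if err := account.Withdraw(50); err != nil {
		fmt.Println("withdraw failed:", err)
	}
	account.Deposit(25)

	// Print the event log.
	for _, event := range store.GetEvents() {
		switch event.Type {
		case "deposit":
			fmt.Printf("Deposited %d\n", event.Data.(int))
		case "withdraw":
			fmt.Printf("Withdrew %d\n", event.Data.(int))
		}
	}

	// The balance is derived from events, so replaying them gives the same result.
	replayed := Rehydrate("123", store)
	fmt.Printf("Final balance: %d (replayed: %d)\n", account.GetBalance(), replayed.GetBalance())
}
```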

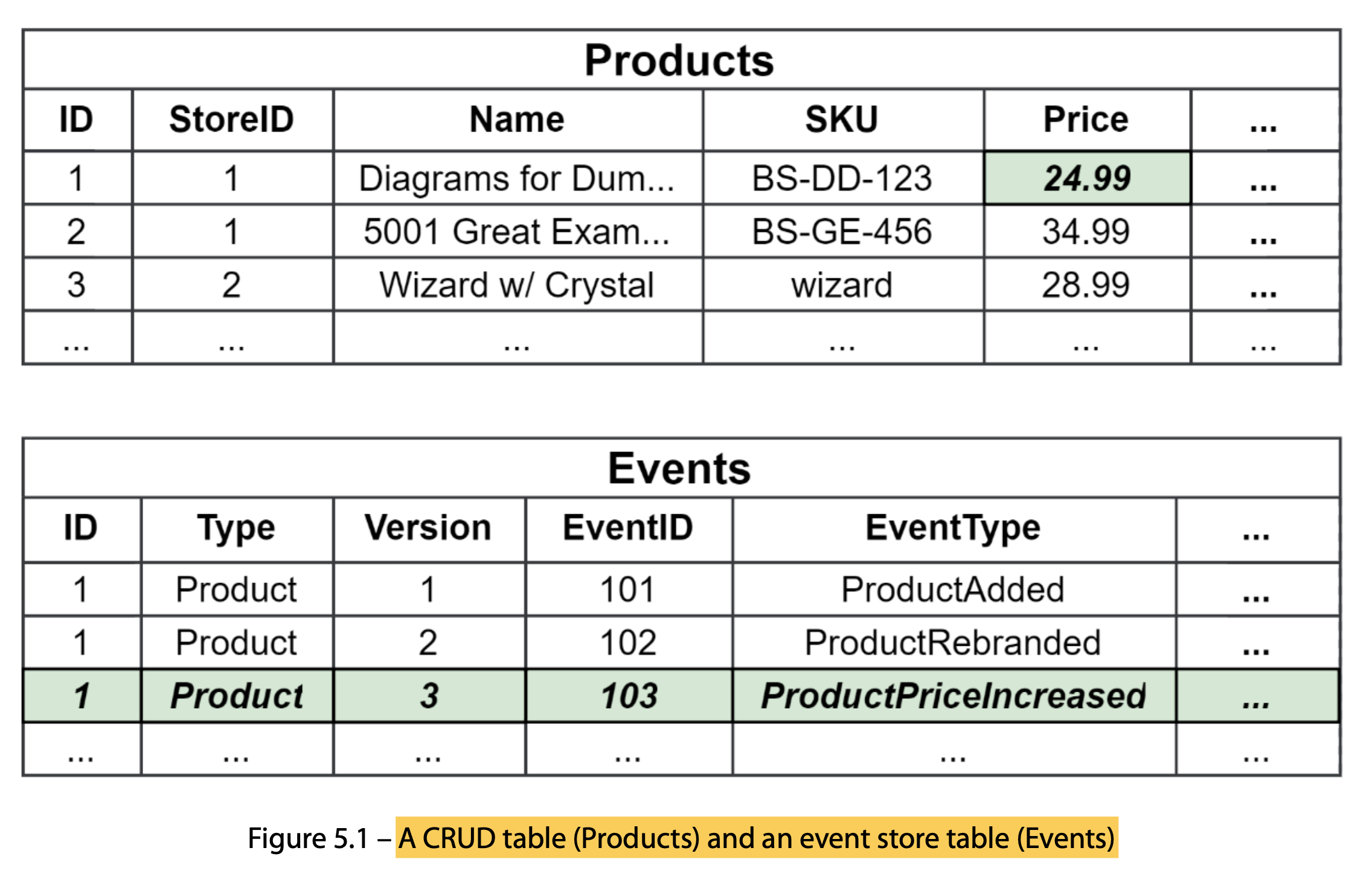

Event sourcing records every change made to an aggregate by appending it, as an event, to an ordered stream. To reconstruct the current state of an aggregate, you read these events in sequence and apply each one to the aggregate. This contrasts with a create, read, update, and delete (CRUD) system, which modifies records in place: each change to a record’s state is written directly to the database, overwriting the previous version of that record.
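The read-and-apply step can be sketched as a fold over the stream. This is a minimal Go sketch under simplifying assumptions: `PriceChanged`, `Product`, and `Replay` are hypothetical names, there is only one event type, and the stream is an in-memory slice rather than a real event store. Stopping the replay early yields the aggregate’s state as of an earlier event, which is one way to reconstruct state at a past point in time.

```go
package main

import "fmt"

// PriceChanged is a hypothetical event type used for illustration.
type PriceChanged struct {
	NewPrice int // price in cents
}

// Product is a hypothetical aggregate whose state is derived only from events.
type Product struct {
	Price   int
	Version int // number of events applied so far
}

// Apply returns the aggregate state after one more event.
func (p Product) Apply(e PriceChanged) Product {
	p.Price = e.NewPrice
	p.Version++
	return p
}

// Replay applies the first upTo events in order, starting from the zero value.
// A negative or out-of-range upTo replays the whole stream (the current state).
func Replay(stream []PriceChanged, upTo int) Product {
	if upTo < 0 || upTo > len(stream) {
		upTo = len(stream)
	}
	var p Product
	for _, e := range stream[:upTo] {
		p = p.Apply(e)
	}
	return p
}

func main() {
	stream := []PriceChanged{{NewPrice: 1000}, {NewPrice: 1200}, {NewPrice: 1100}}
	fmt.Println("current state:", Replay(stream, -1))
	fmt.Println("after 2 events:", Replay(stream, 2))
}
```

Because `Apply` is a pure function of the previous state and one event, replaying the same events always produces the same aggregate.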

For example, consider changing a product’s price in a CRUD system. When the change is saved to the Products table, only the price column is updated and the rest of the row remains unchanged. However, as Figure 5.1 illustrates, this approach loses both the previous price and the context behind the change.

With event sourcing, the change is instead recorded as an event in the Events table, which preserves not only the new price but also important metadata, such as the reason for the adjustment. The previous price is not lost: it remains in an earlier event and can be retrieved when needed.
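To make the contrast concrete, here is a small Go sketch that models the Products table as a map and the Events table as an append-only slice. The types and methods (`PriceChanged`, `EventLog`, `ChangePrice`, `History`) and the sample prices and reasons are hypothetical, invented for illustration; a real system would persist both tables in a database.

```go
package main

import "fmt"

// PriceChanged is a hypothetical event: the new price and why it changed.
type PriceChanged struct {
	ProductID string
	Price     int // in cents
	Reason    string
}

// EventLog is a hypothetical stand-in for the Events table; it is only appended to.
type EventLog []PriceChanged

// ChangePrice records a price change as a new event instead of updating a row.
func (l *EventLog) ChangePrice(productID string, price int, reason string) {
	*l = append(*l, PriceChanged{ProductID: productID, Price: price, Reason: reason})
}

// History returns every price change recorded for one product, oldest first.
func (l EventLog) History(productID string) []PriceChanged {
	var out []PriceChanged
	for _, e := range l {
		if e.ProductID == productID {
			out = append(out, e)
		}
	}
	return out
}

func main() {
	// CRUD: the Products row keeps only the latest price.
	products := map[string]int{"sku-1": 1000}
	products["sku-1"] = 1200 // the old price and the reason for the change are gone
	fmt.Println("CRUD row price:", products["sku-1"])

	// Event sourcing: every change is a new event; nothing is overwritten.
	var events EventLog
	events.ChangePrice("sku-1", 1000, "initial listing")
	events.ChangePrice("sku-1", 1200, "supplier cost increase")
	for _, e := range events.History("sku-1") {
		fmt.Printf("price %d (%s)\n", e.Price, e.Reason)
	}
}
```

The previous price is recovered simply by reading the earlier event, and each event carries its own reason.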

Effective event sourcing implementations should use an event store that offers strong consistency guarantees and supports optimistic concurrency control. In practice, each append states the stream version the writer expects to find. When several modifications to the same stream happen concurrently, only the first one to be written can append its events; the others now see a different version than they expected, so they must be retried against the updated stream or rejected.